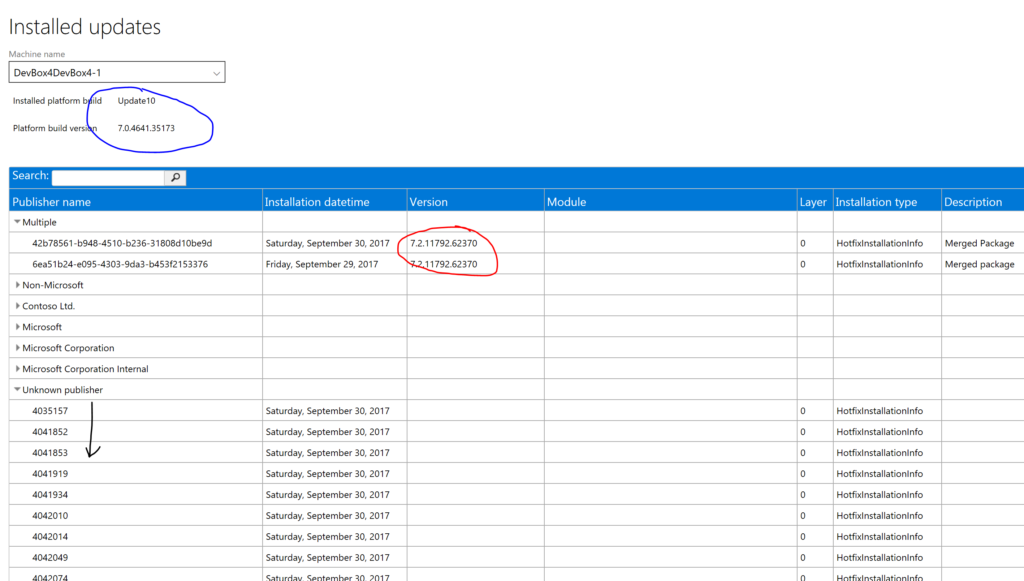

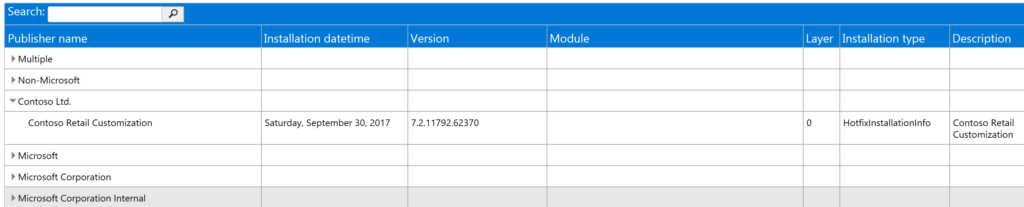

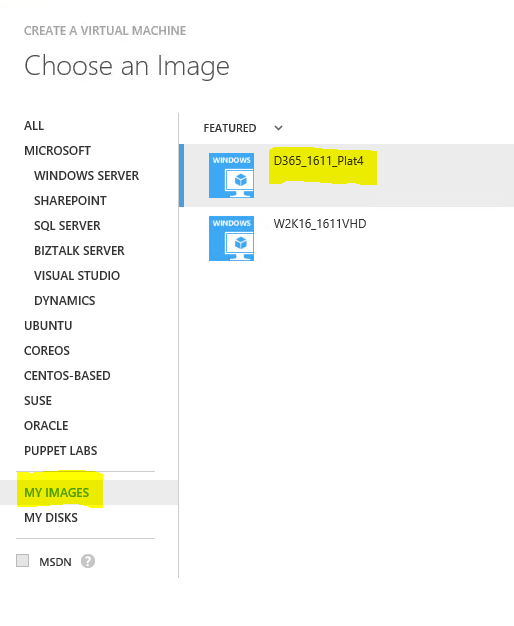

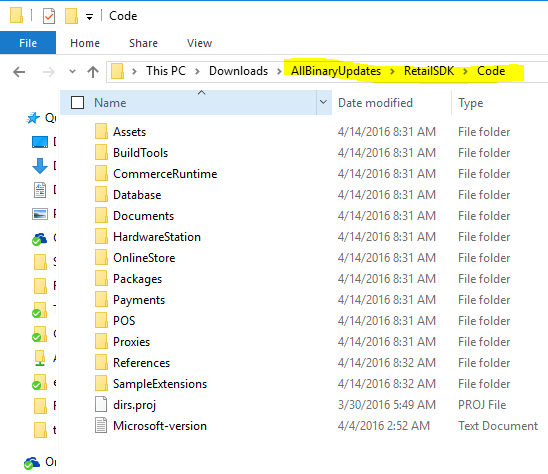

Note: Implemented with Dynamics 365 version 7.2.11792.62628, or App Update 4 (may not work with lower versions). Sample code can be downloaded at the end of this blog.

A frequent customization need is to store more transactional data in the channel database in order to synchronize it back to HQ. A set of tables holds this data, for example RetailTransactionTable, RetailTransactionSalesTrans, RetailTransactionTaxTrans, and a few others.

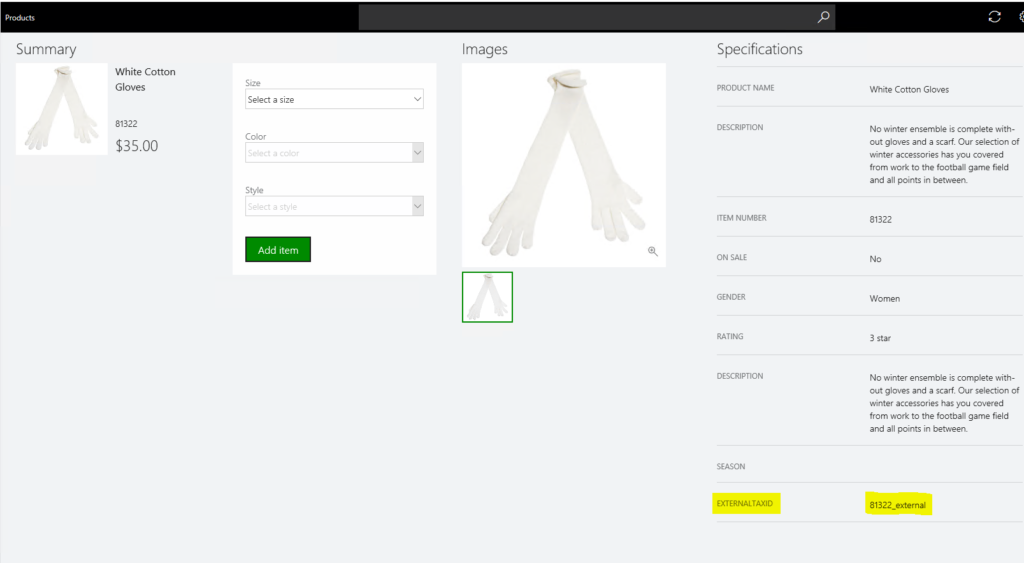

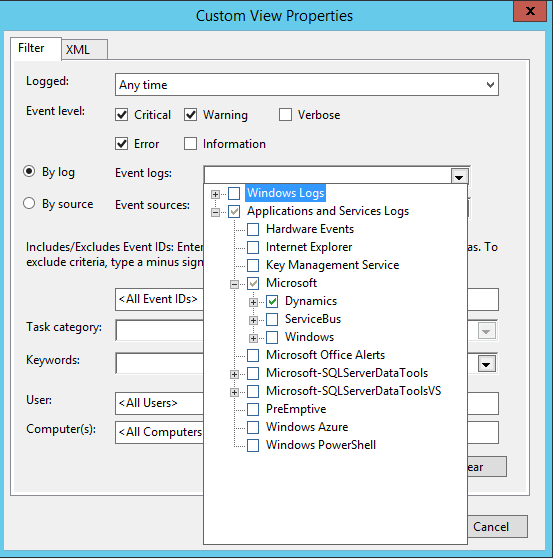

In this example, I show how to extend the RetailTransactionTaxTrans table, how to write to it from a CRT data service, how to create a view to sync it back to AX, and how to configure CDX. The single string that extends the tax line is passed in by means of a CRT extension property. This sample is part of a larger sample that overrides the CRT’s tax calculation.

Let's work through the pieces from the bottom up.

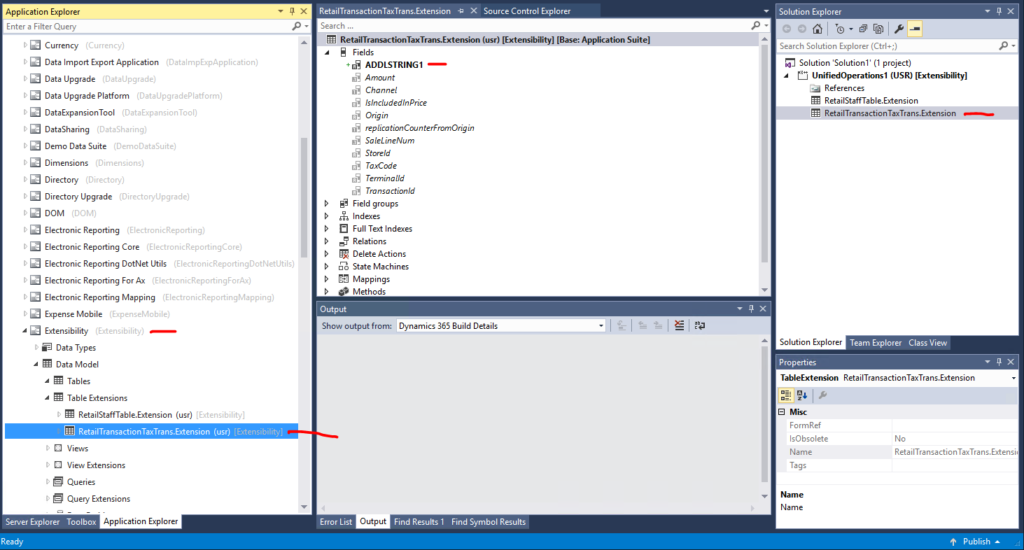

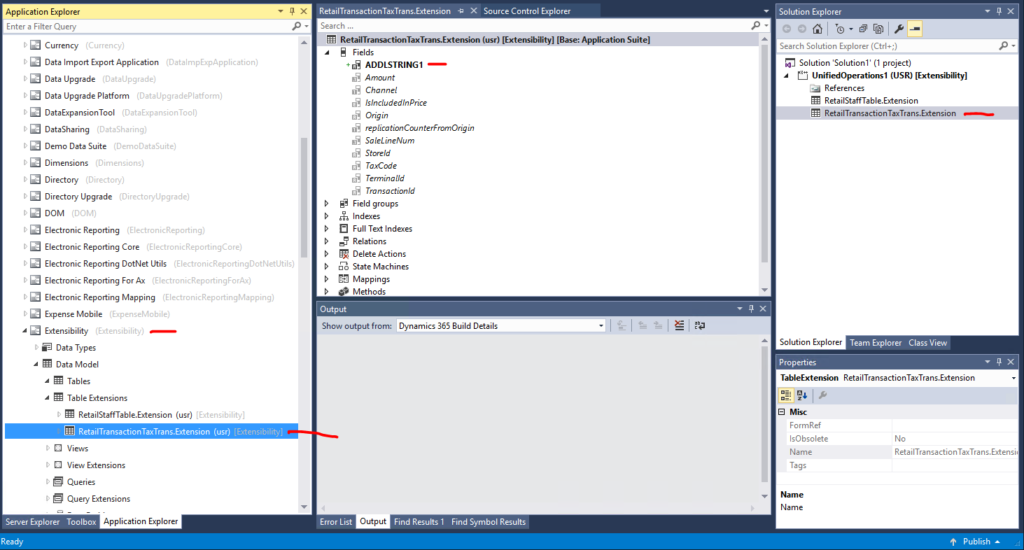

HQ table extension (X++)

Build an X++ table extension for the table RetailTransactionTaxTrans by use of the AOT and Visual Studio. Before you do that, create a new Dynamics 365 package and model. In the screenshot below you can see I created a package called “Extensibility” and a model called “Extensibility” and added a new string column called ADDLSTRING1.

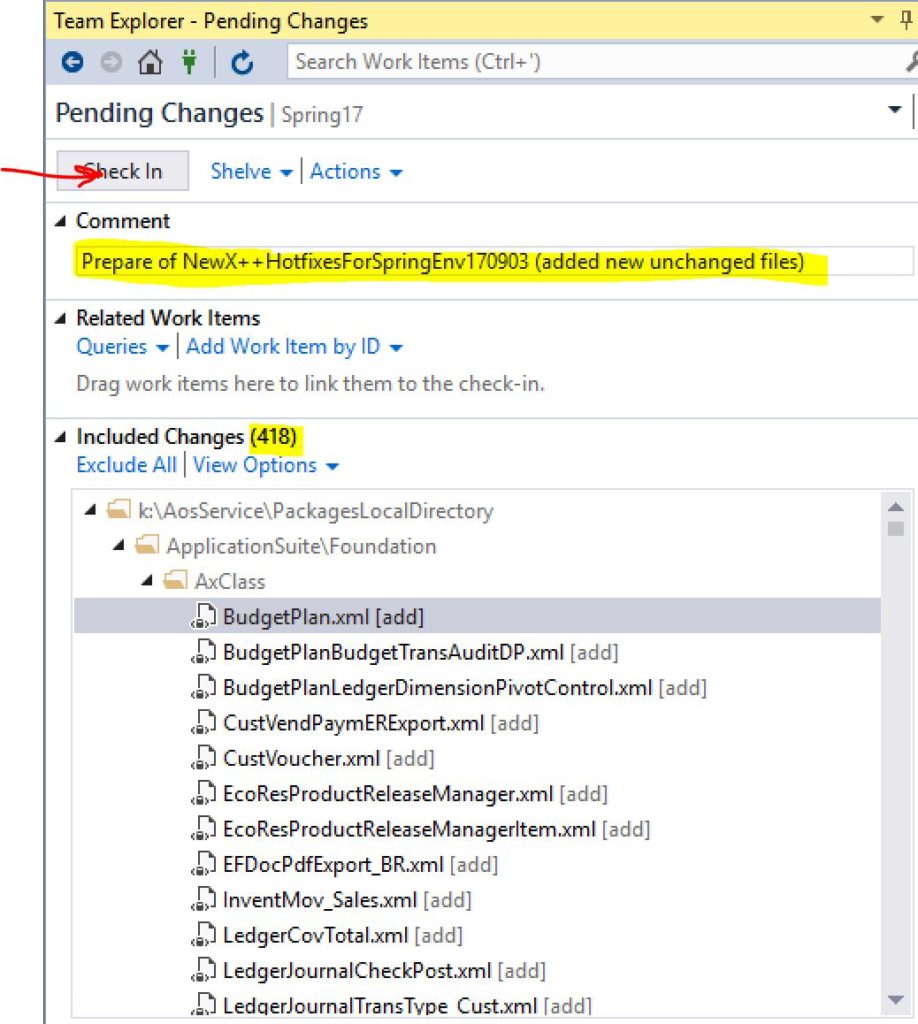

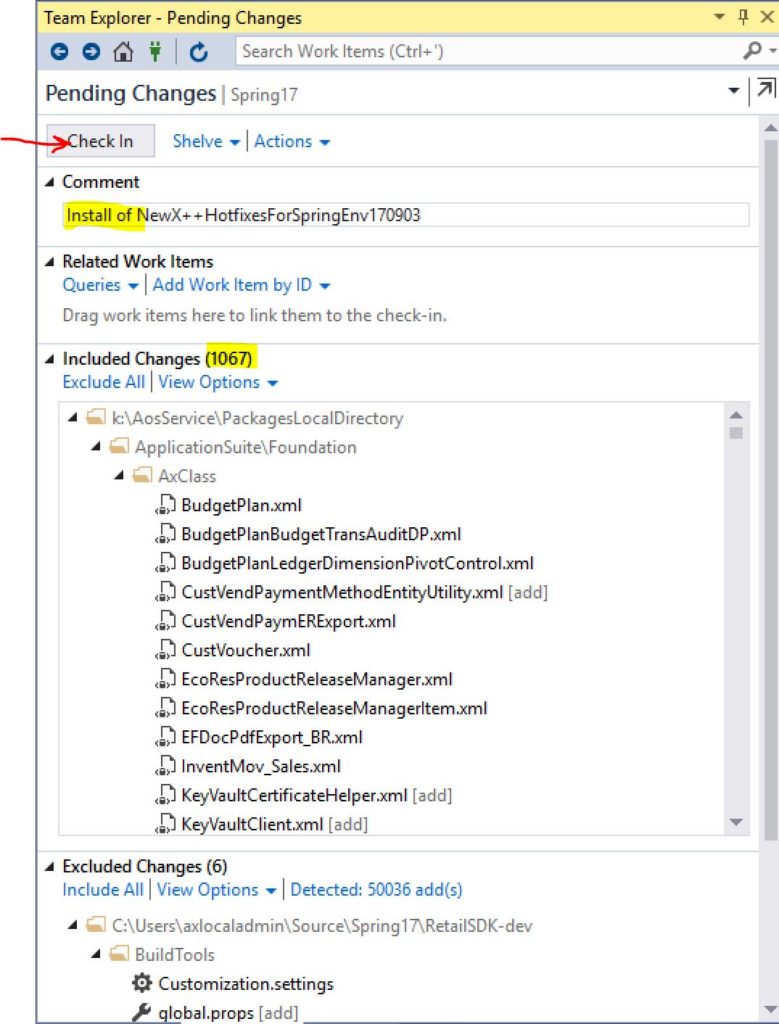

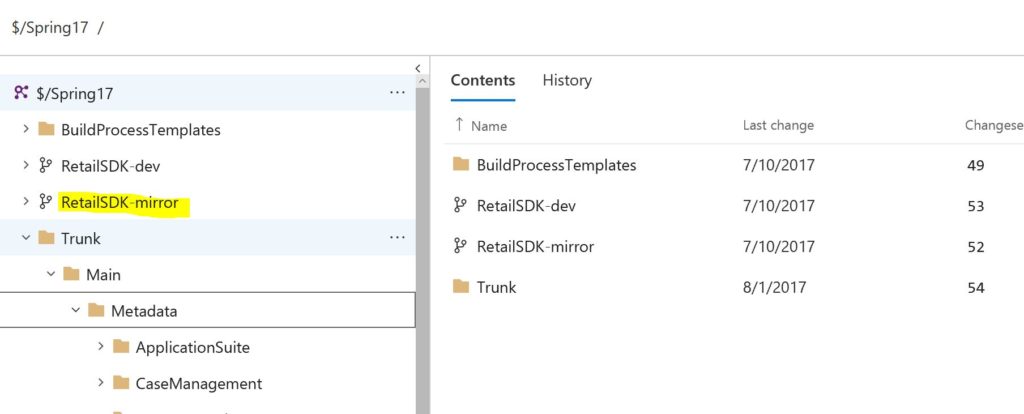

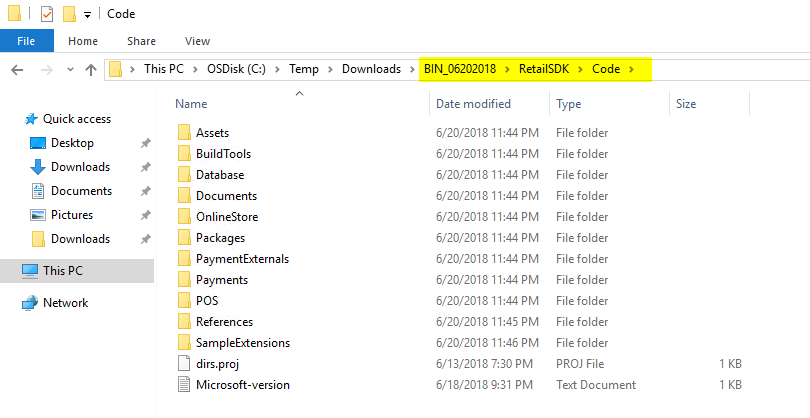

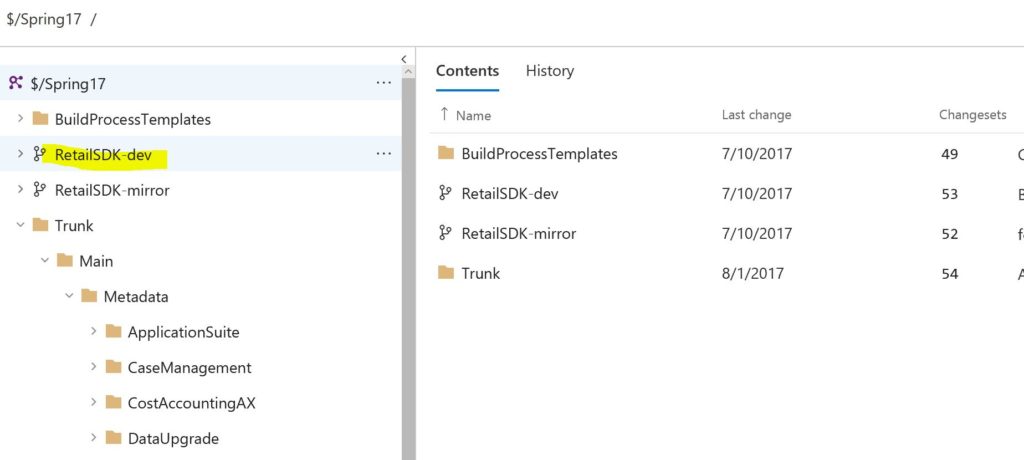

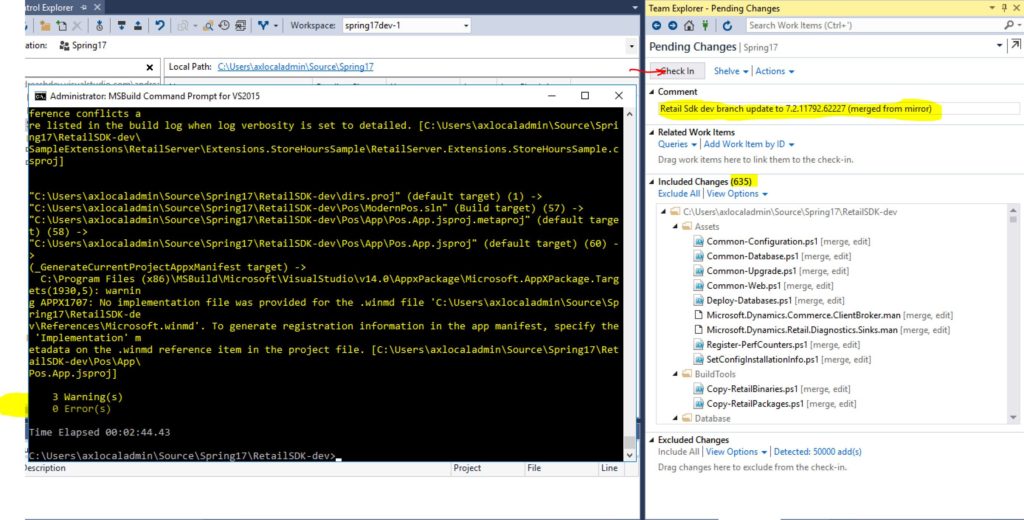

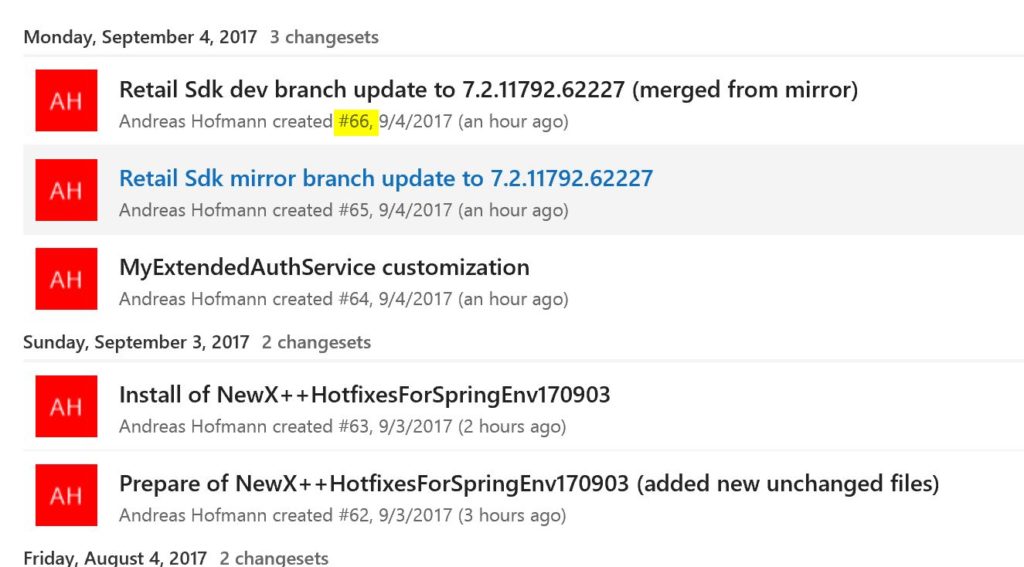

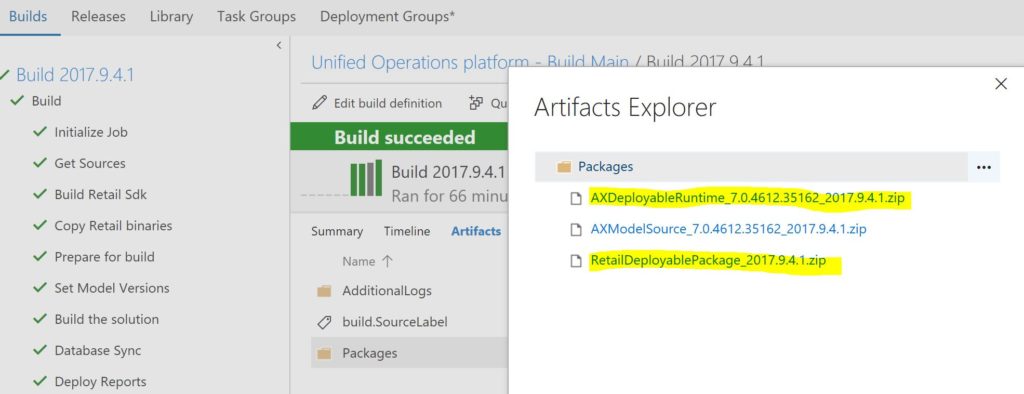

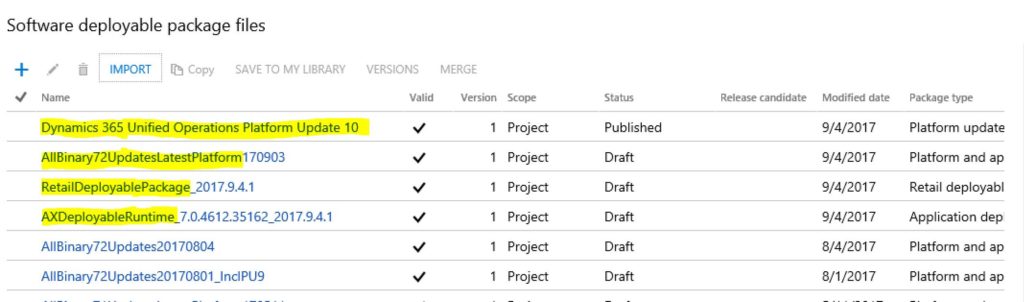

Make sure to build and run a database sync, and when everything looks good, add the new package to VSTS.

Channel database extension (SQL)

Since we want to get the data from the channel to the HQ, we must store it initially in the channel (or even in the ModernPOSOffline db). In order to do that, we must develop some SQL for the channel database.

The new way of doing this is to create any new SQL in the db schema “[ext]” and NOT in any preexisting schemas, i.e. [ax], [dbo], [crt]. You can reference Microsoft’s schemas, but be careful not to take too many dependencies. SQL objects cannot be considered a real interface, so the more assumptions your SQL makes about Microsoft’s SQL, the higher the probability that you will need to fix something someday when taking an update. In the SQL I show here, you will see that the new table, view, and stored procedure live in the [ext] schema.

New table

We need to store the new column in a new table. Since in this sample we want to store one more string value, the table needs at least this new column, plus all the columns that allow us to reference the corresponding record in the original table (base table). The correct approach is to add all PK columns from the original table to the new table as well. In addition, it is good practice (but not required) to add columns for creation and modification time.

Also, make sure that the PK on the new table is the same as on the original one. This is needed so CDX can find the data correctly.

Lastly, we need to provide CRUD privileges on the table to the DataSyncUsersRole (CDX) and insert privileges to the UsersRole (CRT process).

```sql
-- Create the extension table only if it does not exist yet, so the script can be re-run.
IF OBJECT_ID(N'[ext].[RETAILTRANSACTIONTAXTRANS]', N'U') IS NULL
BEGIN
    CREATE TABLE [ext].[RETAILTRANSACTIONTAXTRANS](
        [CHANNEL] [bigint] NOT NULL,
        [SALELINENUM] [numeric](32, 16) NOT NULL,
        [STOREID] [nvarchar](10) NOT NULL,
        [TAXCODE] [nvarchar](10) NOT NULL,
        [TERMINALID] [nvarchar](10) NOT NULL,
        [TRANSACTIONID] [nvarchar](44) NOT NULL,
        [DATAAREAID] [nvarchar](4) NOT NULL,
        [CREATEDDATETIME] [datetime] NOT NULL,
        [MODIFIEDDATETIME] [datetime] NOT NULL,
        [ROWVERSION] [timestamp] NOT NULL,
        [ADDLSTRING1] [nvarchar](200) NOT NULL DEFAULT (''),
        -- Same PK columns as on the original table
        CONSTRAINT [I_EXT_RETAILTRANSACTIONTAXTRANS_PK] PRIMARY KEY NONCLUSTERED
        (
            [CHANNEL] ASC,
            [TERMINALID] ASC,
            [STOREID] ASC,
            [TRANSACTIONID] ASC,
            [SALELINENUM] ASC,
            [TAXCODE] ASC,
            [DATAAREAID] ASC
        ) WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
    ) ON [PRIMARY]
END
GO

-- CDX needs full CRUD, the CRT process only needs to insert
GRANT SELECT,INSERT,UPDATE,DELETE ON [ext].[RETAILTRANSACTIONTAXTRANS] TO [DataSyncUsersRole]
GRANT INSERT ON [ext].[RETAILTRANSACTIONTAXTRANS] TO [UsersRole]
GO
```
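Since CDX relies on the PK of the new table matching the original one, it can help to compare the two side by side. The query below is a minimal sketch using the standard INFORMATION_SCHEMA views; it assumes the original table is [ax].[RETAILTRANSACTIONTAXTRANS] and that you run it with a login that can see metadata for both schemas.

```sql
-- List the PK columns of the original table and the extension table, in key order.
-- Both schemas should return the same column names in the same positions.
SELECT kcu.TABLE_SCHEMA,
       kcu.TABLE_NAME,
       kcu.ORDINAL_POSITION,
       kcu.COLUMN_NAME
FROM INFORMATION_SCHEMA.TABLE_CONSTRAINTS tc
JOIN INFORMATION_SCHEMA.KEY_COLUMN_USAGE kcu
    ON kcu.CONSTRAINT_SCHEMA = tc.CONSTRAINT_SCHEMA
   AND kcu.CONSTRAINT_NAME = tc.CONSTRAINT_NAME
WHERE tc.CONSTRAINT_TYPE = 'PRIMARY KEY'
  AND tc.TABLE_NAME = 'RETAILTRANSACTIONTAXTRANS'
  AND tc.TABLE_SCHEMA IN ('ax', 'ext')
ORDER BY kcu.TABLE_SCHEMA, kcu.ORDINAL_POSITION;
```

If the two result sets differ, adjust the PK constraint on the [ext] table before configuring CDX.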

New view

The view should expose exactly the same columns as the original table, plus the new column. As explained above, both tables have the same PK, so we use those columns to LEFT JOIN the two.

Grant the SELECT privilege to the DataSyncUsersRole (CDX).

```sql
CREATE VIEW [ext].[RETAILTRANSACTIONTAXTRANSEXTVIEW] AS
(
    SELECT
        tt.[AMOUNT],
        tt.[CHANNEL],
        tt.[ISINCLUDEDINPRICE],
        tt.[REPLICATIONCOUNTERFROMORIGIN],
        tt.[SALELINENUM],
        tt.[STOREID],
        tt.[TAXCODE],
        tt.[TERMINALID],
        tt.[TRANSACTIONID],
        tt.[DATAAREAID],
        tt.[ROWVERSION],
        exttt.[ADDLSTRING1],
        exttt.[MODIFIEDDATETIME],
        exttt.[CREATEDDATETIME]
    FROM [ax].[RetailTransactionTaxTrans] tt
    LEFT JOIN [ext].[RETAILTRANSACTIONTAXTRANS] exttt
        ON  tt.[CHANNEL] = exttt.[CHANNEL]
        AND tt.[TERMINALID] = exttt.[TERMINALID]
        AND tt.[STOREID] = exttt.[STOREID]
        AND tt.[TRANSACTIONID] = exttt.[TRANSACTIONID]
        AND tt.[SALELINENUM] = exttt.[SALELINENUM]
        AND tt.[TAXCODE] = exttt.[TAXCODE]
        AND tt.[DATAAREAID] = exttt.[DATAAREAID]
)
GO

GRANT SELECT ON [ext].[RETAILTRANSACTIONTAXTRANSEXTVIEW] TO [DataSyncUsersRole]
GO
```
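Because the join runs over the full PK of both tables, the view should return exactly one row per row of the original table. A quick sanity check, sketched here under the assumption that you have SELECT rights on both objects, is to compare the two row counts:

```sql
-- The LEFT JOIN on the complete PK must not add or drop rows,
-- so both counts are expected to be equal.
SELECT
    (SELECT COUNT(*) FROM [ax].[RETAILTRANSACTIONTAXTRANS]) AS BaseTableRows,
    (SELECT COUNT(*) FROM [ext].[RETAILTRANSACTIONTAXTRANSEXTVIEW]) AS ViewRows;
```

A higher count on the view side would suggest that the join condition or the PK on the extension table is missing a column.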

New table type (TVP)

A table-valued parameter (TVP) type is a convenient way to “pass around a data table”. We want to call a stored procedure once and pass in a whole data table, which is more efficient than the alternative of passing individual parameters for each column and calling the stored procedure once per row. In this sample, since we are creating tax lines, one call will usually create a few rows (5 sales items with 2 different tax codes each would produce 10 rows). I created the TVP by copying the original one and extending it with the new column.

```sql
CREATE TYPE [crt].[EXTRETAILTRANSACTIONTAXTRANSTABLETYPE] AS TABLE(
    [DATAAREAID] [nvarchar](4) NULL,
    [SALELINENUM] [numeric](32, 16) NULL,
    [STOREID] [nvarchar](10) NULL,
    [TAXCODE] [nvarchar](10) NULL,
    [TERMINALID] [nvarchar](10) NULL,
    [TRANSACTIONID] [nvarchar](44) NULL,
    [ADDLSTRING1] [nvarchar](200) NOT NULL)
GO

GRANT EXECUTE ON TYPE::[crt].[EXTRETAILTRANSACTIONTAXTRANSTABLETYPE] TO [UsersRole];
GO
```

New stored procedure (sproc)

The sproc takes the channel ID and the TVP as parameters. It will be called by our CRT data service below.

```sql
CREATE PROCEDURE [ext].[INSERTRETAILTRANSACTIONTAXTRANS]
(@bi_ChannelId bigint,
@tvp_ExtRetailTransactionTaxTrans [crt].[EXTRETAILTRANSACTIONTAXTRANSTABLETYPE] READONLY)
AS
BEGIN
...
```
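Before wiring up the CRT, the sproc can be exercised directly from a query window on a development database. The following is only a sketch: the channel ID and all row values are made-up placeholders, and it assumes the complete sproc from the downloadable sample is deployed and that your login may execute it. Everything runs inside a transaction that is rolled back, so no test rows should remain if the batch completes.

```sql
BEGIN TRANSACTION;

-- Fill a TVP instance with two hypothetical tax lines for one sales line.
DECLARE @rows [crt].[EXTRETAILTRANSACTIONTAXTRANSTABLETYPE];

INSERT INTO @rows (DATAAREAID, SALELINENUM, STOREID, TAXCODE, TERMINALID, TRANSACTIONID, ADDLSTRING1)
VALUES
    (N'TEST', 1, N'STORE1', N'TAX1', N'TERM1', N'TEST-TRANSACTION-1', N'first value'),
    (N'TEST', 1, N'STORE1', N'TAX2', N'TERM1', N'TEST-TRANSACTION-1', N'second value');

-- Placeholder channel ID; use a real one from your environment.
EXEC [ext].[INSERTRETAILTRANSACTIONTAXTRANS]
    @bi_ChannelId = 1,
    @tvp_ExtRetailTransactionTaxTrans = @rows;

-- Inspect what the sproc wrote before undoing it.
SELECT *
FROM [ext].[RETAILTRANSACTIONTAXTRANS]
WHERE TRANSACTIONID = N'TEST-TRANSACTION-1';

ROLLBACK TRANSACTION;
```

Passing both tax lines in one call is the point of the TVP: the CRT data service does the same thing with a DataTable.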

CRT data service to write to the new table

Each CRT service (for data or other purposes) requires a Request class. In this example, we want to store additional tax line data from the transaction. So we can pass the SalesTransaction instance to our service via the Request.

```csharp
public sealed class InsertTaxTransExtensionDataRequest : DataRequest
{
    public InsertTaxTransExtensionDataRequest(SalesTransaction transaction)
    {
        this.Transaction = transaction;
    }

    [DataMember]
    [Required]
    public SalesTransaction Transaction { get; private set; }
}
```

The data service must find the data we want to store and build a DataTable with multiple DataRows that correspond to the TVP in SQL. Make sure the columns are in the same order. Then we use the DatabaseContext class to call the sproc, passing in the expected parameters.

```csharp
// Excerpt from the data service's request handler.
// tx is assumed to be the SalesTransaction from the request (request.Transaction).
using (DataTable taxTable = new DataTable("EXTRETAILTRANSACTIONTAXTRANSTABLETYPE"))
{
    // Columns must be added in the same order as in the SQL table type.
    taxTable.Columns.Add(DataAreaIdColumn, typeof(string)).DefaultValue = string.Empty;
    taxTable.Columns.Add(SaleLineNumColumn, typeof(decimal)).DefaultValue = 0m;
    taxTable.Columns.Add(StoreIdColumn, typeof(string)).DefaultValue = string.Empty;
    taxTable.Columns.Add(TaxCodeColumn, typeof(string)).DefaultValue = string.Empty;
    taxTable.Columns.Add(TerminalIdColumn, typeof(string)).DefaultValue = string.Empty;
    taxTable.Columns.Add(TransactionIdColumn, typeof(string)).DefaultValue = string.Empty;
    taxTable.Columns.Add(ADDLSTRING1Column, typeof(string)).DefaultValue = string.Empty;

    // One row per tax line of each active sales line.
    foreach (var line in tx.ActiveSalesLines)
    {
        foreach (var taxItem in line.TaxLines)
        {
            DataRow row = taxTable.NewRow();
            row[DataAreaIdColumn] = request.RequestContext.GetChannelConfiguration().InventLocationDataAreaId;
            row[SaleLineNumColumn] = line.LineNumber;
            row[StoreIdColumn] = tx.StoreId;
            row[TaxCodeColumn] = taxItem.TaxCode;
            row[TerminalIdColumn] = tx.TerminalId;
            row[TransactionIdColumn] = tx.Id;

            // The extra string arrives as a CRT extension property on the tax line.
            object oAddlString = taxItem.GetProperty("AdditionalString1");
            string addlString = string.Empty;
            if (oAddlString != null)
            {
                addlString = (string)oAddlString;
            }

            row[ADDLSTRING1Column] = addlString;
            taxTable.Rows.Add(row);
        }
    }

    ParameterSet parameters = new ParameterSet();
    parameters[ChannelIdVariableName] = request.RequestContext.GetPrincipal().ChannelId;
    parameters[TVPVariableName] = taxTable;

    int errorCode;
    using (var databaseContext = new SqlServerDatabaseContext(request))
    {
        errorCode = databaseContext.ExecuteStoredProcedureNonQuery("[ext].INSERTRETAILTRANSACTIONTAXTRANS", parameters);
    }
}
```

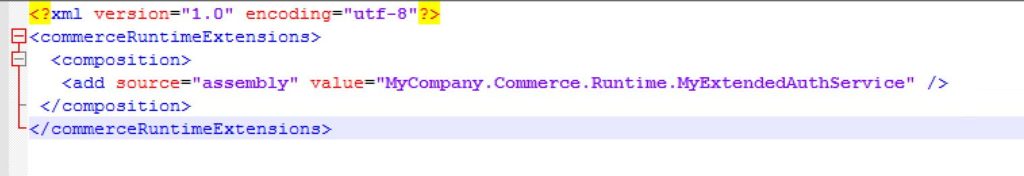

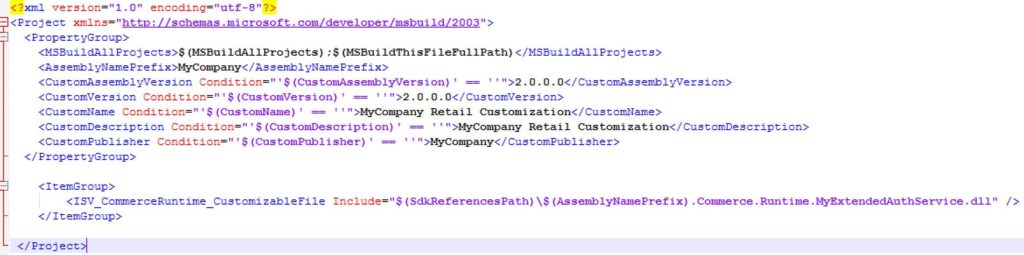

Finally, register this CRT data service with the CRT, in the commerceruntime.ext.config (RS) and the commerceruntime.ext.offline.config (MPOS offline).

Implement trigger in the appropriate place to call CRT data service

For our use case, it is ideal to piggyback on the SaveSalesTransactionDataRequest CRT request and provide a post-trigger implementation that calls our new data service. In that trigger, we initialize the request to our new data service and let the CRT take care of routing the call.

```csharp
class SaveSalesTransactionDataRequestTrigger : IRequestTrigger
{
    public IEnumerable<Type> SupportedRequestTypes
    {
        get
        {
            return new[] { typeof(SaveSalesTransactionDataRequest) };
        }
    }

    // Post-trigger: runs after the transaction has been saved.
    public void OnExecuted(Request request, Response response)
    {
        ThrowIf.Null(request, "request");
        ThrowIf.Null(response, "response");

        var newRequest = new InsertTaxTransExtensionDataRequest(((SaveSalesTransactionDataRequest)request).SalesTransaction);
        request.RequestContext.Execute(newRequest);
    }

    // Pre-trigger: nothing to do before the save.
    public void OnExecuting(Request request)
    {
    }
}
```

Do not forget to register the trigger in the commerceruntime.ext.config (RS) and the commerceruntime.ext.offline.config (MPOS offline), in the same way as for the data service above.

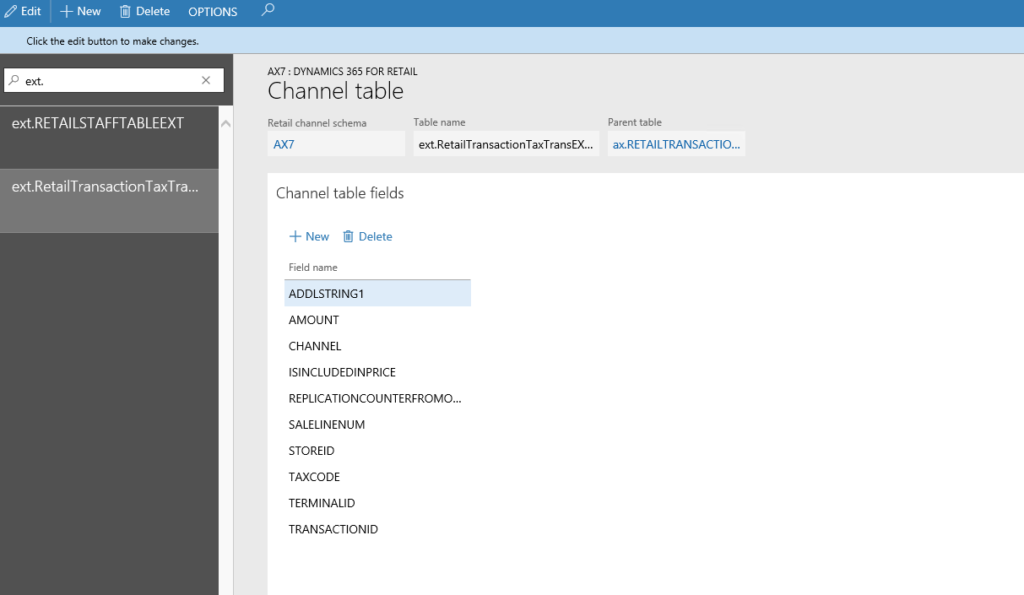

Configure CDX

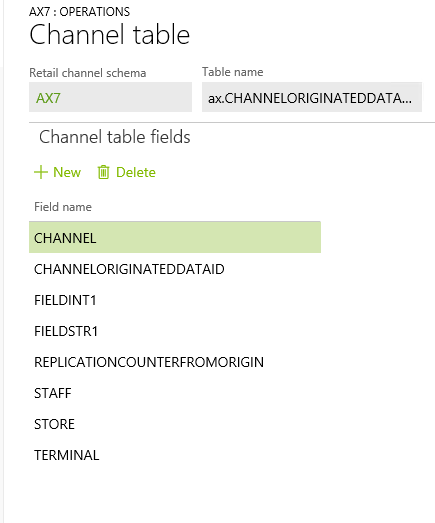

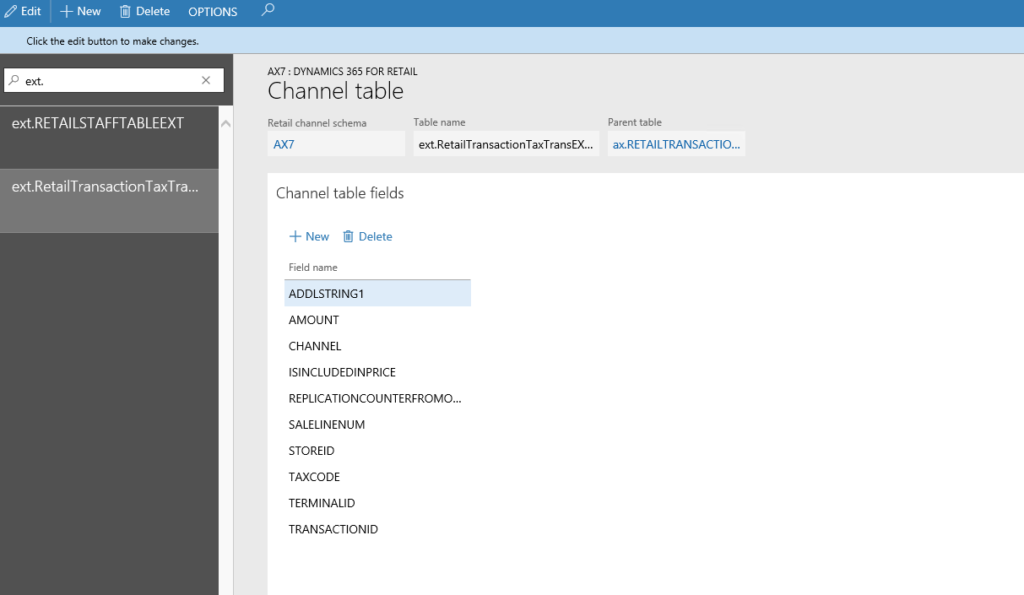

New AX7 schema table

Create a new AX7 schema table called “ext.RetailTransactionTaxTransEXTView” in the “Retail Channel schema” page for AX7 schema. Make sure the same columns are used as in the original table called “ax.RETAILTRANSACTIONTAXTRANS”, plus the new ADDLSTRING1 column.

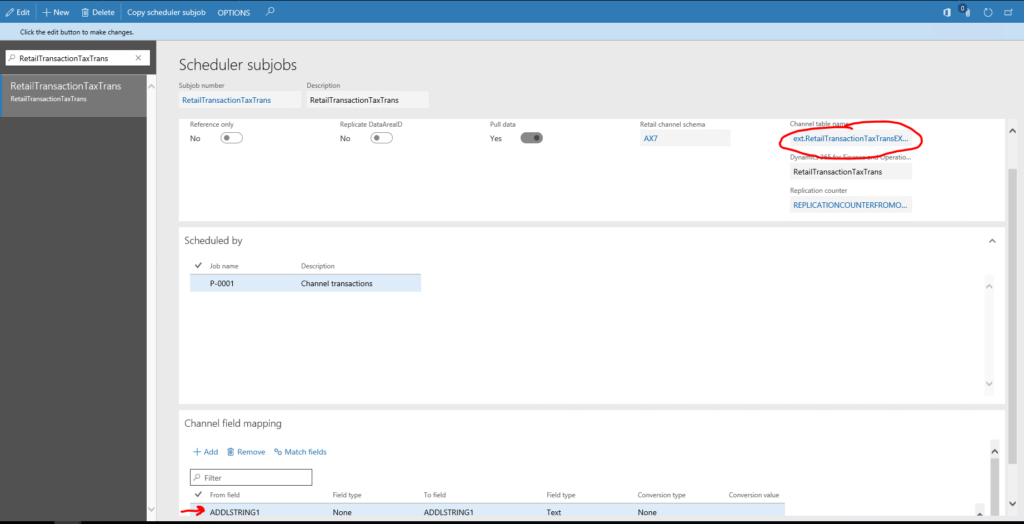

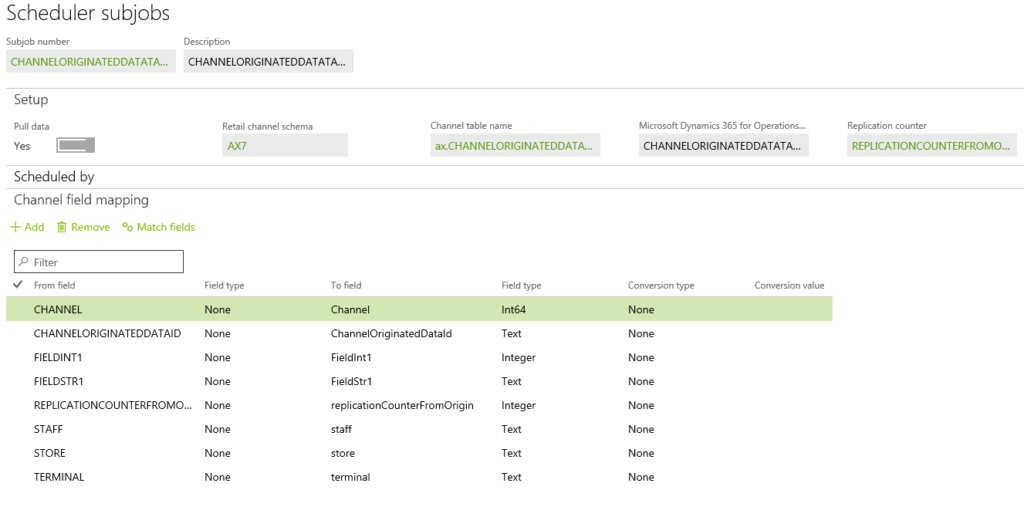

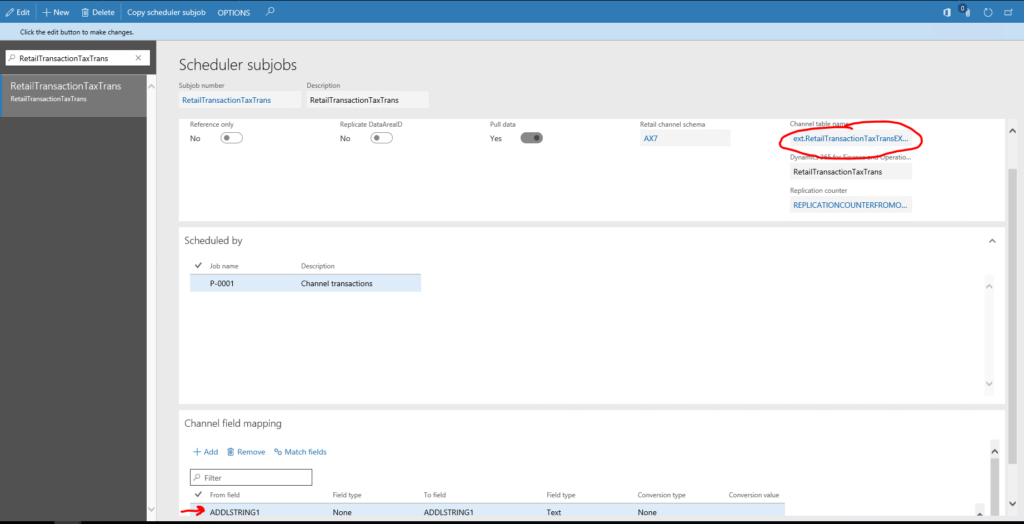

Switch scheduler subjob to use view instead of original table

In “Scheduler subjobs”, find the subjob called “RetailTransactionTaxTrans”, change its table to the newly created one, and add the new column.

Finally, use the “Generate queries” in the “Retail channel schema” to initialize CDX.

Resource file to automatically configure CDX after deployment

Brand new feature, to be continued at a future time…

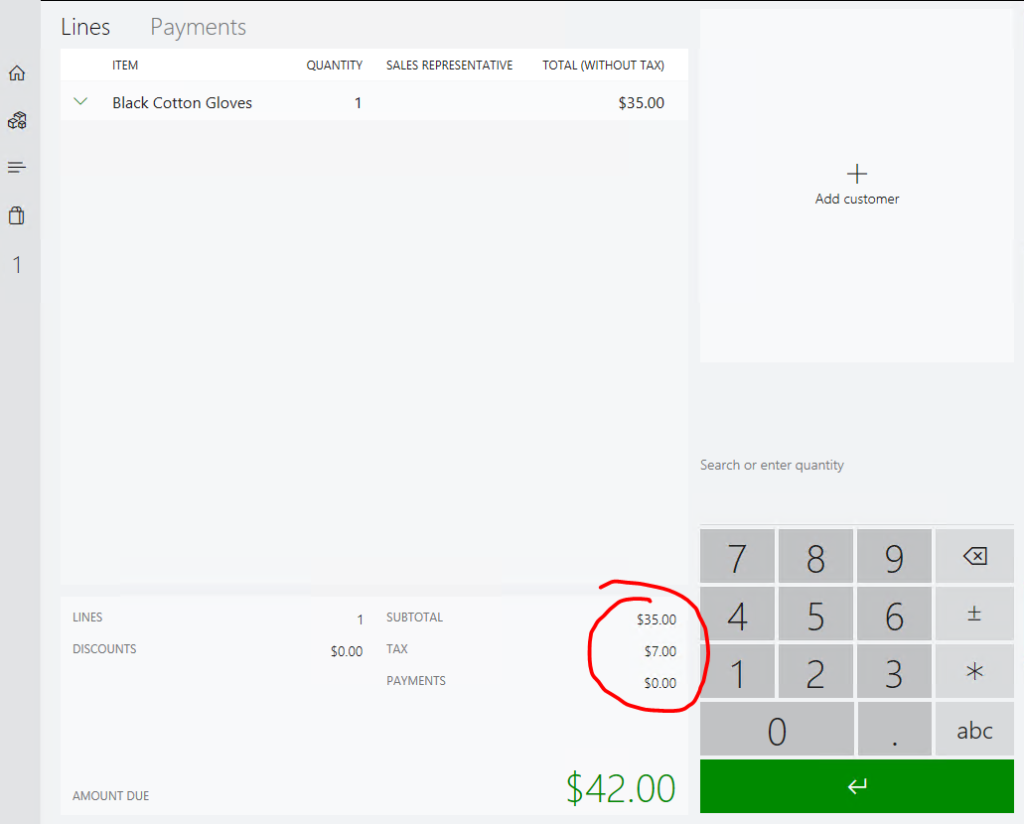

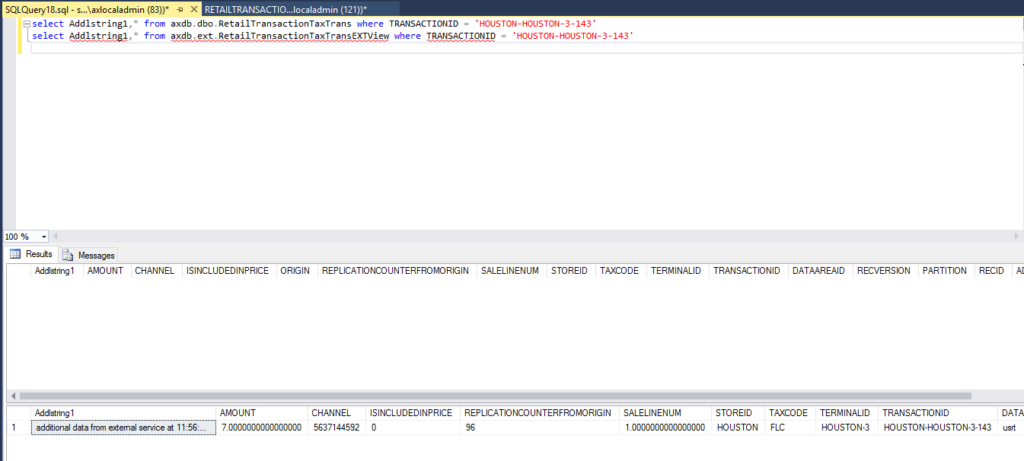

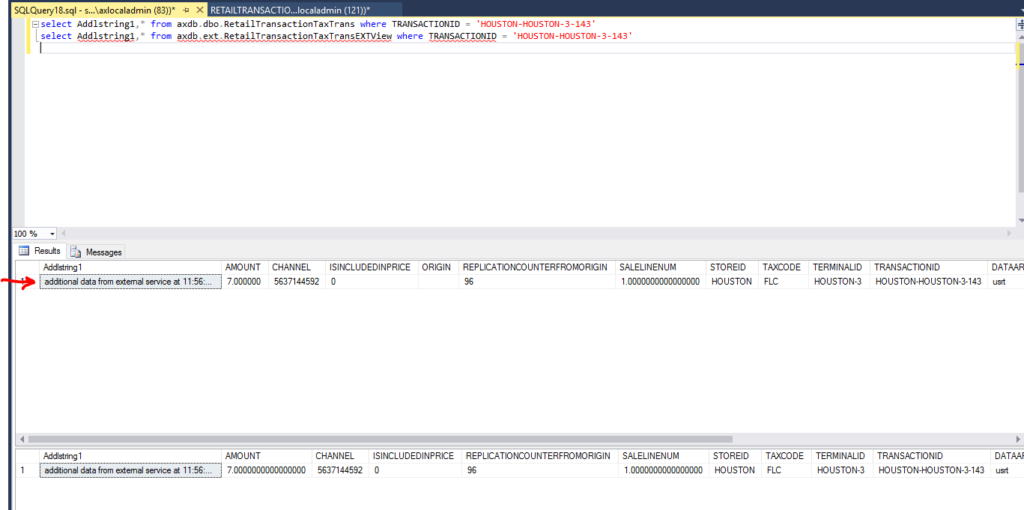

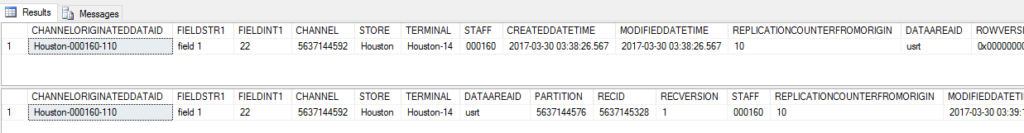

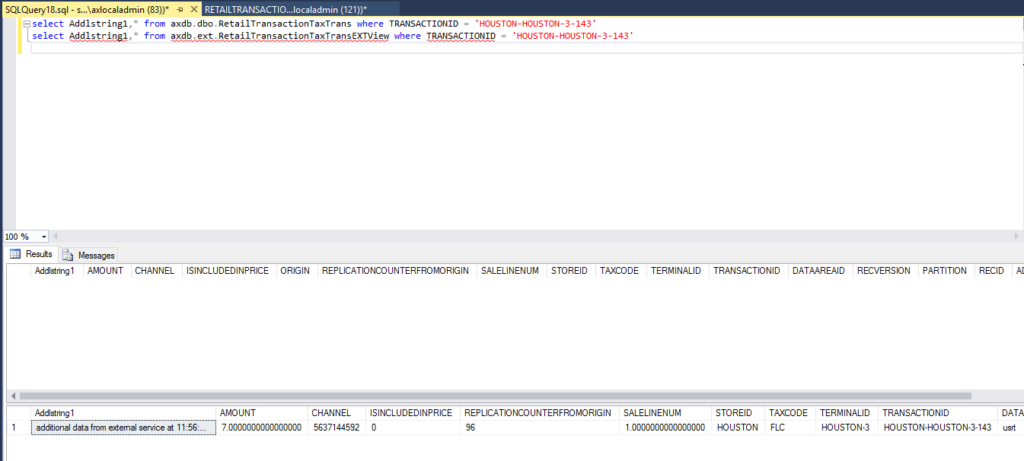

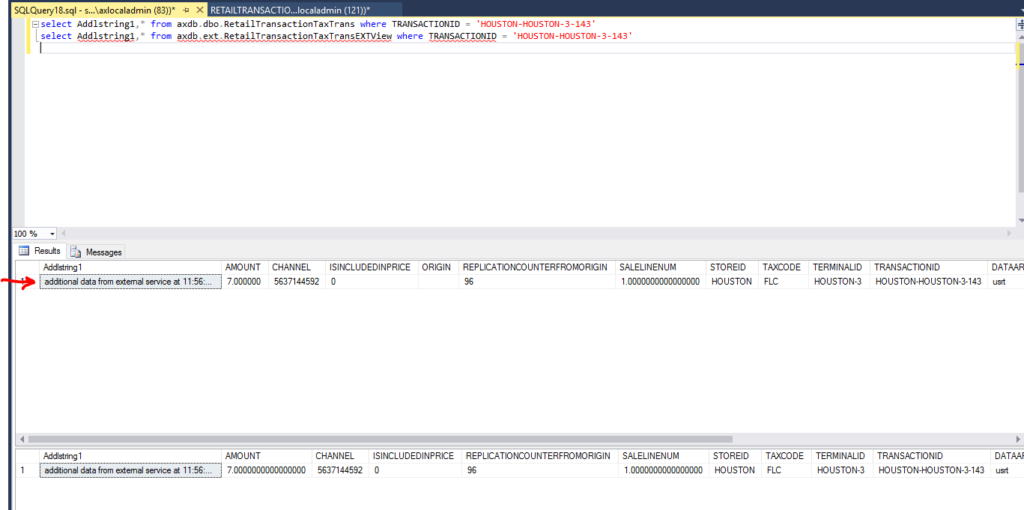

Testing

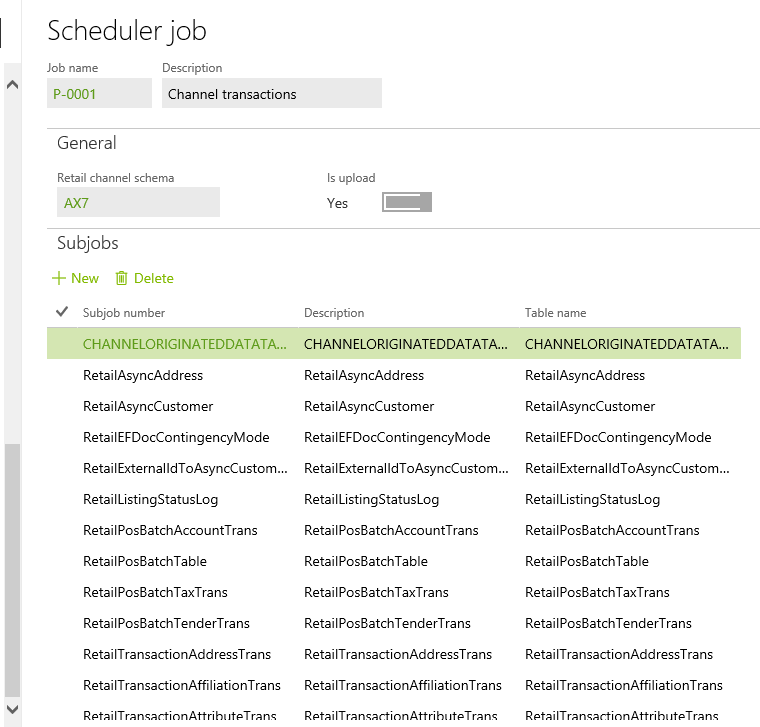

Run through your scenario in your POS client to save a transaction. Then look in the database before and after to see that the extension table gets populated and synced with the P-0001 job.
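For the channel side of that check, a query along these lines can be run before and after saving the transaction. It is a sketch that only uses the view and columns defined earlier in this post; rows without an extension record show NULL in the extension columns and sort last.

```sql
-- Most recently written extension rows first.
SELECT TOP (20)
    v.[TRANSACTIONID],
    v.[SALELINENUM],
    v.[TAXCODE],
    v.[AMOUNT],
    v.[ADDLSTRING1],
    v.[CREATEDDATETIME]
FROM [ext].[RETAILTRANSACTIONTAXTRANSEXTVIEW] v
ORDER BY v.[CREATEDDATETIME] DESC;
```

After the P-0001 job has run, the same ADDLSTRING1 values should appear on the corresponding tax lines in HQ.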

Extensions.MyTaxTransExtensionDataServiceBlog