Overview

A typical D365 Finance and Operations implementation project usually requires multiple Tier-1 developer environments. Each developer needs her own, and the environments are also often used for prototyping, testing, and housing golden configurations. The accumulated cost can be substantial. On the other hand, users often complain that the default-sized VMs perform poorly and increase the size for a better experience.

Cost and performance are hard to optimize together; it looks like an either-or situation. I asked myself how to find an optimum, which I would define as a very fast user experience while I use the VM, at a very small additional cost. Since I noticed that many struggle with the same issue, I am sharing the two approaches I use to manage it. Note that you need administrative privileges for the VM in the Azure portal.

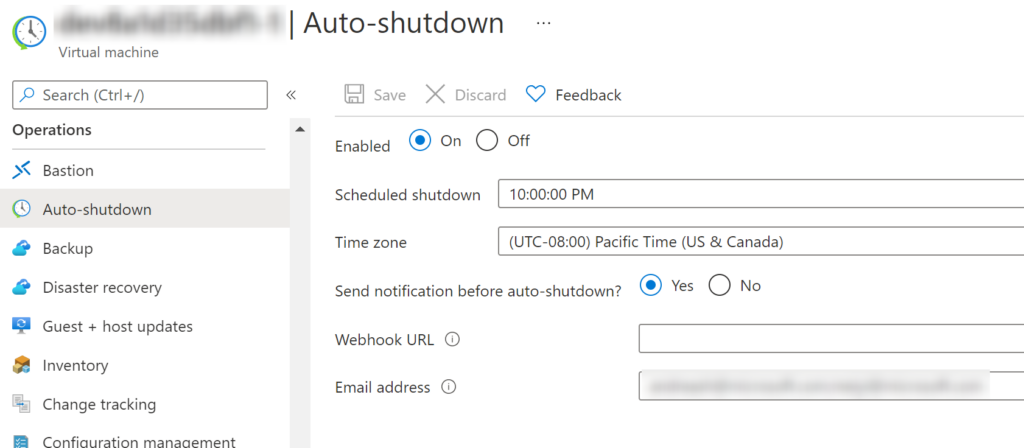

Set up a daily Auto-Shutdown

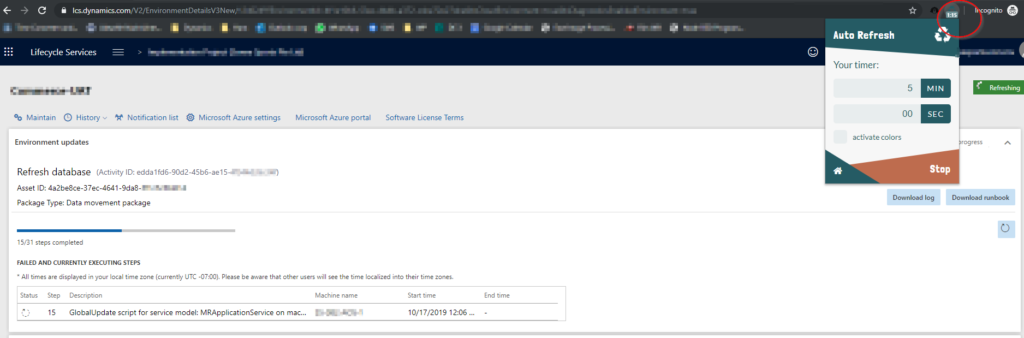

I set up the VM to shut down automatically every night. I get an email about it 30 minutes in advance, so I can change my mind in case I am still using the VM. This keeps the running time of the VM down in case I forget to shut it down manually. With that setting, the VM has to be explicitly started again, so I often have the VM off for a few days, which saves cost. The screenshot shows the setting in the Azure portal.

I usually start and stop my VMs manually.
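The auto-shutdown setting can also be scripted instead of clicked. The following is a minimal sketch, assuming that the portal stores the setting as a `Microsoft.DevTestLab/schedules` resource named `shutdown-computevm-<vmName>` in the VM's resource group (this is my understanding of what the portal does, so verify it against an existing VM first). The email address, shutdown time, and time zone are placeholder values.

```powershell
# Placeholders: replace with your own values.
$rgName   = '****'
$vmName   = '****'
$email    = 'you@example.com'   # hypothetical notification recipient
$shutdown = '1900'              # shutdown time in 24h format (HHmm)
$timeZone = 'UTC'               # time zone id for the shutdown time

$vm = Get-AzVM -ResourceGroupName $rgName -Name $vmName
$subscriptionId = (Get-AzContext).Subscription.Id

# Assumption: the schedule lives next to the VM under this resource id.
$scheduleId = "/subscriptions/$subscriptionId/resourceGroups/$rgName" +
              "/providers/Microsoft.DevTestLab/schedules/shutdown-computevm-$vmName"

$properties = @{
    status               = 'Enabled'
    taskType             = 'ComputeVmShutdownTask'
    dailyRecurrence      = @{ time = $shutdown }
    timeZoneId           = $timeZone
    targetResourceId     = $vm.Id
    notificationSettings = @{
        status         = 'Enabled'
        timeInMinutes  = 30       # email 30 minutes before shutdown
        emailRecipient = $email
    }
}

New-AzResource -ResourceId $scheduleId -Location $vm.Location -Properties $properties -Force
```

After running it, the Auto-shutdown blade of the VM in the portal should show the same values.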

Scale VM and disk sizes up and down

Consider how long a VM is actually running versus how long it is stopped because you do not need it. In my case, the running time is a small percentage. Because of that, I can justify scaling up at additional cost for the few times I use it.
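To make the reasoning concrete, here is a small arithmetic sketch. The function name `Get-MonthlyVmCost` and all rates are made up for illustration and are NOT real Azure prices; look up the current prices for your region before drawing conclusions. It also simplifies disk cost to a flat monthly amount.

```powershell
function Get-MonthlyVmCost {
    param(
        [double] $HourlyRate,        # compute price per running hour
        [double] $HoursRunning,      # hours the VM is actually started
        [double] $DiskCostPerMonth   # disks are billed whether the VM runs or not
    )
    # Compute is only billed while the VM is running (not deallocated).
    return ($HourlyRate * $HoursRunning) + $DiskCostPerMonth
}

# Placeholder numbers for illustration only.
$smallAlwaysOn = Get-MonthlyVmCost -HourlyRate 0.5 -HoursRunning 730 -DiskCostPerMonth 20
$largeOnDemand = Get-MonthlyVmCost -HourlyRate 1.0 -HoursRunning 40  -DiskCostPerMonth 20

Write-Host "Small VM, always on:     $smallAlwaysOn"
Write-Host "Large VM, 40 hours only: $largeOnDemand"
```

With these placeholder rates, a VM that costs twice as much per hour is still far cheaper per month when it only runs for a fraction of the time.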

There is a lot of public documentation about VM sizes and disk sizes and which ones suit which workloads. I am not repeating this here, but just want to share my approach. I encourage you to experiment and share your comments.

Important note: Disks incur cost even when the VM is stopped. Stopping the VM is not enough; the disks must also be scaled down. Make sure to understand this!
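A quick way to check where a VM currently stands is to look at its power state and the tier of its attached disks. This is a minimal sketch, assuming the disks live in the same resource group as the VM (the scripts in this post make the same assumption).

```powershell
# Placeholders: replace with your own values.
$rgName = '****'
$vmName = '****'

$vm = Get-AzVM -ResourceGroupName $rgName -Name $vmName

# Power state, for example 'VM running' or 'VM deallocated'.
(Get-AzVM -ResourceGroupName $rgName -Name $vmName -Status).Statuses |
    Where-Object { $_.Code -like 'PowerState/*' } |
    Select-Object -ExpandProperty DisplayStatus

# Tier and size of every managed disk attached to the VM.
Get-AzDisk -ResourceGroupName $rgName |
    Where-Object { $_.ManagedBy -eq $vm.Id } |
    Select-Object Name, DiskSizeGB, @{ Name = 'Sku'; Expression = { $_.Sku.Name } }
```

If the VM is deallocated but the disks still show `Premium_LRS`, the disks are still billed at the premium rate.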

My Low-cost config for a stopped VM (cheap)

VM size (VM/Settings/Size): Standard B8ms (8 vcpus, 32 GiB memory)

Disk type for all disks (each disk/Settings/Size + performance): Standard HDD LRS

My High-performance config for a started VM (very fast)

VM size (VM/Settings/Size): Standard DS13_v2 (8 vcpus, 56 GiB memory)

Disk type for all disks (each disk/Settings/Size + performance): Premium SSD LRS

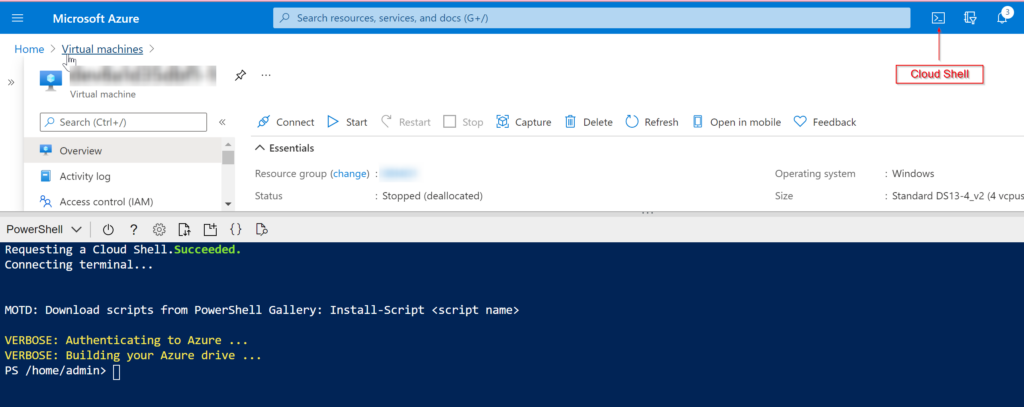
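Before relying on these two sizes, it can be worth checking that both are offered for your VM. A minimal sketch using `Get-AzVMSize`, which lists the sizes the given VM can be resized to:

```powershell
# Placeholders: replace with your own values.
$rgName = '****'
$vmName = '****'

# The two sizes used in this post.
$wanted = 'Standard_B8ms', 'Standard_DS13_v2'

Get-AzVMSize -ResourceGroupName $rgName -VMName $vmName |
    Where-Object { $_.Name -in $wanted } |
    Select-Object Name, NumberOfCores, MemoryInMB, MaxDataDiskCount
```

If one of the sizes is missing from the output, it may not be available in the VM's region or on its current hardware; pick a comparable size in that case.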

PowerShell scripts to scale up and down

I am sharing two scripts that take care of the scaling. You can run them right in the Azure portal’s Cloud Shell. Just configure your resource group name and VM name, save the scripts, and upload them to the Cloud Shell’s home directory.

When I want to use the VM, I run the scale up script. When the script finishes, the VM is started.

When I am done using the VM, I run the scale down script (which also stops the VM).

Scale up script

# start config

$rgName = '****'

$vmName = '****'

# end config

$dataDiskStorageType = 'Premium_LRS'

$vmSize = 'Standard_DS13_v2'

cls

Write-Host 'Scaling up VM' $vmName

Stop-AzVM -ResourceGroupName $rgName -Name $vmName -Force

$vm = Get-AzVM -Name $vmName -resourceGroupName $rgName

$vm.HardwareProfile.VmSize = $vmSize

foreach($dataDiskStorageProfile in $vm.StorageProfile.DataDisks)

{

Set-AzVMDataDisk -VM $vm -Name $dataDiskStorageProfile.Name -Caching ReadWrite

}

Update-AzVM -VM $vm -ResourceGroupName $rgName

$currentFoundDisk = $null

foreach ($disk in Get-AzDisk -ResourceGroupName $rgName )

{

if($disk.ManagedBy -eq $vm.Id)

{

$currentFoundDisk = $disk

Write-Host 'found disk' $disk.Name

$diskUpdateConfig = New-AzDiskUpdateConfig -AccountType $dataDiskStorageType

Update-AzDisk -DiskUpdate $diskUpdateConfig -ResourceGroupName $rgName -DiskName $disk.Name

}

}

Start-AzVM -ResourceGroupName $rgName -Name $vmName

Scale down script

# start config

$rgName = '***'

$vmName = '***'

# end config

$dataDiskStorageType = 'Standard_LRS'

$vmSize = 'Standard_B8ms'

cls

Write-Host 'Scaling down VM' $vmName

Stop-AzVM -ResourceGroupName $rgName -Name $vmName -Force

$vm = Get-AzVM -Name $vmName -resourceGroupName $rgName

$vm.HardwareProfile.VmSize = $vmSize

Update-AzVM -VM $vm -ResourceGroupName $rgName

$currentFoundDisk = $null

foreach ($disk in Get-AzDisk -ResourceGroupName $rgName )

{

if($disk.ManagedBy -eq $vm.Id)

{

$currentFoundDisk = $disk

Write-Host 'found disk' $disk.Name

$diskUpdateConfig = New-AzDiskUpdateConfig -AccountType $dataDiskStorageType

Update-AzDisk -DiskUpdate $diskUpdateConfig -ResourceGroupName $rgName -DiskName $disk.Name

}

}
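The two scripts differ only in the VM size, the disk type, and whether the VM is started at the end. As an alternative, here is a sketch that folds both into one function. The names `Get-ScaleProfile` and `Set-DevVmScale` are my own invention for this example, and the sketch leaves out the data disk caching change from the scale up script.

```powershell
# Returns the target settings for each direction (values taken from the two scripts above).
function Get-ScaleProfile {
    param([ValidateSet('Up', 'Down')] [string] $Direction)
    switch ($Direction) {
        'Up'   { @{ VmSize = 'Standard_DS13_v2'; DiskSku = 'Premium_LRS';  StartAfter = $true  } }
        'Down' { @{ VmSize = 'Standard_B8ms';    DiskSku = 'Standard_LRS'; StartAfter = $false } }
    }
}

function Set-DevVmScale {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $VmName,
        [Parameter(Mandatory)] [ValidateSet('Up', 'Down')] [string] $Direction
    )
    $scaleProfile = Get-ScaleProfile -Direction $Direction
    Write-Host "Scaling $Direction VM $VmName"

    # Stop (deallocate) the VM before changing its size and disk type.
    Stop-AzVM -ResourceGroupName $ResourceGroupName -Name $VmName -Force | Out-Null

    $vm = Get-AzVM -ResourceGroupName $ResourceGroupName -Name $VmName
    $vm.HardwareProfile.VmSize = $scaleProfile.VmSize
    Update-AzVM -VM $vm -ResourceGroupName $ResourceGroupName | Out-Null

    # Switch every disk attached to this VM to the target disk type.
    $diskUpdate = New-AzDiskUpdateConfig -AccountType $scaleProfile.DiskSku
    Get-AzDisk -ResourceGroupName $ResourceGroupName |
        Where-Object { $_.ManagedBy -eq $vm.Id } |
        ForEach-Object {
            Write-Host 'Updating disk' $_.Name
            Update-AzDisk -DiskUpdate $diskUpdate -ResourceGroupName $ResourceGroupName -DiskName $_.Name | Out-Null
        }

    # Only the scale up direction starts the VM again.
    if ($scaleProfile.StartAfter) {
        Start-AzVM -ResourceGroupName $ResourceGroupName -Name $VmName | Out-Null
    }
}
```

After dot-sourcing the file in Cloud Shell, a call would look like `Set-DevVmScale -ResourceGroupName '****' -VmName '****' -Direction Up`, and the same call with `-Direction Down` when you are done.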